At the risk of sounding too optimistic, 2019 might be the year of the private web browser.

In the beginning, browsers were a cobbled-together mess that put a premium on making the contents within look good. Security was an afterthought (there is no better example than Internet Explorer), and user privacy was seldom considered as newer browsers like Google Chrome and Mozilla Firefox focused on speed and reliability.

Ads have kept the internet free for a long time, but with invasive ad tracking at its peak and concerns growing about online privacy (or the lack of it), privacy is finally getting its day in the sun.

Chrome, which claims close to two-thirds of the global browser market, is the latest to double down on new security and privacy features. It follows Firefox, which announced new anti-tracking blockers last month; Microsoft’s Chromium-based Edge, which promised more granular controls over your data; and Apple’s Safari, which began preventing advertisers from tracking you from site to site.

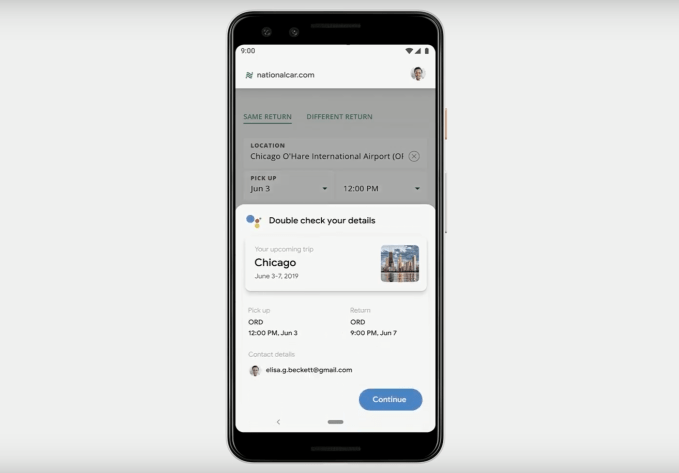

At its annual developer conference Tuesday, Google revealed two new privacy-focused additions: better cookie controls that limit advertisers from tracking your activities across websites, and a new anti-fingerprinting feature.

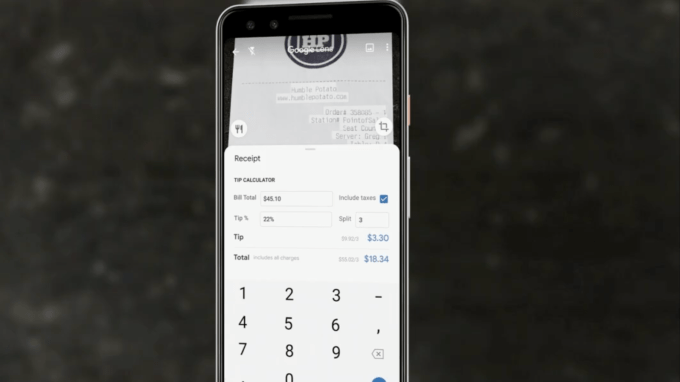

In case you didn’t know: cookies are tiny bits of information left on your computer or device to help websites or apps remember who you are. Cookies can keep you logged into a website, but can also be used to track what you do on a site. Some work across different websites to track you from one site to another, allowing advertisers to build up a profile of where you go and what you visit. Cookie management has long been an on-or-off option. Switching cookies off means advertisers will find it more difficult to track you across sites, but it also means websites won’t remember your login information, which can be an inconvenience.

Soon, Chrome will prevent cookies from working across domains without explicit consent from the user. In other words, advertisers won’t be able to see what you do on the various sites you visit without asking to track you.

Cookies that work only on a single domain aren’t affected, so you won’t suddenly get logged out.

There’s an added benefit: blocking cross-site cookies makes it more difficult for hackers to exploit cross-site vulnerabilities. Through a cross-site request forgery attack, it’s possible in some cases for malicious websites to run commands on a legitimate site that you’re logged into without you knowing. That can be used to steal your data or take over your accounts.

Going forward, Google said it will only let cross-site cookies travel over HTTPS connections, meaning they cannot be intercepted, modified or stolen by hackers when they’re on their way to your computer.
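To make the rules above concrete, here is a minimal sketch of the decision a browser might make before attaching a cookie to a request. It is a toy model of the behavior described in this article, not Chrome’s actual implementation. The `Cookie` class, the `cross_site` flag and the `should_send` function are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cookie:
    name: str
    site: str                  # site that set the cookie
    cross_site: bool = False   # hypothetical flag: explicitly cleared for cross-site use


def should_send(cookie: Cookie, page_site: str, scheme: str) -> bool:
    """Return True if this toy browser would attach the cookie."""
    # Cookies used only on the site that set them are unaffected,
    # so logins keep working.
    if cookie.site == page_site:
        return True
    # Cross-site use needs the explicit flag AND an HTTPS connection.
    return cookie.cross_site and scheme == "https"


session = Cookie("session", "news.example")
tracker = Cookie("ad_id", "ads.example")
allowed = Cookie("widget", "ads.example", cross_site=True)

print(should_send(session, "news.example", "http"))   # True: same site
print(should_send(tracker, "news.example", "https"))  # False: not cleared for cross-site use
print(should_send(allowed, "news.example", "https"))  # True: cleared, and over HTTPS
print(should_send(allowed, "news.example", "http"))   # False: cleared, but not over HTTPS
```

The same check is what blunts a cross-site request forgery: a request triggered from a malicious page arrives without the victim’s login cookie unless that cookie was deliberately cleared for cross-site use.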

Cookies are only a small part of how users are tracked across the web. These days it’s just as easy to take the unique fingerprints of your browser to see which sites you’re visiting.

Fingerprinting is a way for websites and advertisers to collect as much information as possible about your browser, including its plugins and extensions, and your device, such as its make, model, and screen resolution. Together, those details create a “fingerprint” that’s unique to your device. Because fingerprinting doesn’t rely on cookies, websites can look at your browser fingerprint even when you’re in incognito mode or private browsing.
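As a rough illustration of why this works without cookies, the sketch below hashes a handful of browser and device attributes into a single identifier. The attribute names and values are made up for the example, and real fingerprinting scripts gather far more signals than this. The `browser_fingerprint` function is a hypothetical helper, not any tracker’s real code.

```python
import hashlib
import json


def browser_fingerprint(attributes: dict) -> str:
    """Hash a set of browser/device attributes into one stable identifier."""
    # Sort keys so the same attributes always serialize the same way.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical attributes a script might read from a visitor's browser.
visit_normal = {
    "plugins": ["pdf-viewer", "ad-blocker"],
    "device": "ExampleBook 13",
    "screen": "2560x1600",
}

# Private browsing clears cookies, but these attributes stay the same.
visit_incognito = dict(visit_normal)

# A different device differs in at least one attribute.
other_device = {**visit_normal, "screen": "1366x768"}

print(browser_fingerprint(visit_normal) == browser_fingerprint(visit_incognito))  # True
print(browser_fingerprint(visit_normal) == browser_fingerprint(other_device))     # False
```

Nothing is stored on your device here. The identifier is recomputed from what the browser reveals on every visit, which is why clearing cookies does nothing to change it.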

Google said — without giving much away as to how — it “plans” to aggressively work against fingerprinting, but didn’t give a timeline of when the feature will roll out.

Make no mistake, Google is stepping up to the privacy plate, following in the footsteps of Apple, Mozilla and Microsoft. Now that Google’s on board, close to two-thirds of the world’s browser users are set to soon benefit.